Artificial Intelligence you can trust

Professor Ding Zhao developed a new course that teaches engineering students how to make artificial intelligence trustworthy.

With its vast potential to address some of the world’s most pressing problems, Artificial Intelligence (AI) can be applied to healthcare, security, transportation, manufacturing, climate change, world hunger, and efforts to mitigate discrimination and inequality. But its rapid expansion is coupled with as much risk as reward. Before consumers will embrace self-driving cars or doctors will rely on a computer-generated diagnosis, AI developers are tasked with proving the trustworthiness of their innovations.

Engineers have a critical role to play in the application of AI, a field that might otherwise be considered the domain of computer scientists and programmers. Mechanical engineers are particularly well suited to this task because they connect people to the physical things that they need to interact with the world around them. This world is governed by laws of physics that engineers understand and apply in order to develop safe and reliable devices and complex systems. Engineers also have experience analyzing data to integrate and optimize these systems.

Carnegie Mellon University’s Department of Mechanical Engineering is preparing future engineers not only to use AI as a tool for problem solving, but also to solve the challenges that arise with the integration of AI.

In the newly created Trustworthy AI Autonomy course, Ding Zhao, an assistant professor of mechanical engineering, is focused on teaching students how recent advances in artificial intelligence and real-world cyber-physical autonomy can ensure safety by providing explainable, reliable, and verifiable results.

“There was no such course as this, and it’s a very important aspect of AI,” said Zhao, who added, “There are areas where every failure will attract the attention of the world.”

Zhao set two primary objectives for the course. He aimed to help students develop a high-level understanding of trustworthy AI autonomy. And he wanted to provide students with experience in developing full-cycle research capabilities including preparing research proposals, writing and reviewing papers, making presentations, and drafting academic publications. The course also helped students identify a direction for their future studies and develop the expertise needed to be globally competitive.

“I am very impressed by how fast the young generation learns the new knowledge and how innovative they could be,” said Zhao. “Most of the papers I assign were published in the last two years, some published a few weeks ago. The students have the guts to reach the frontier of the human knowledge and use the new tools to solve a variety of big problems with their diverse backgrounds.”

The course began with a review of the fundamental knowledge for trustworthy AI autonomy, including adversarial attack and defense techniques, statistical modeling, related machine learning applications and robust evaluation. Students were also assigned an extensive series of readings on innovative, state-of-the-art trustworthy AI research. And finally, the students worked in groups on research projects that were presented to a group of judges from the university and industry experts from Toyota, IBM, Bosch, Denso, and Cleveland Clinic.

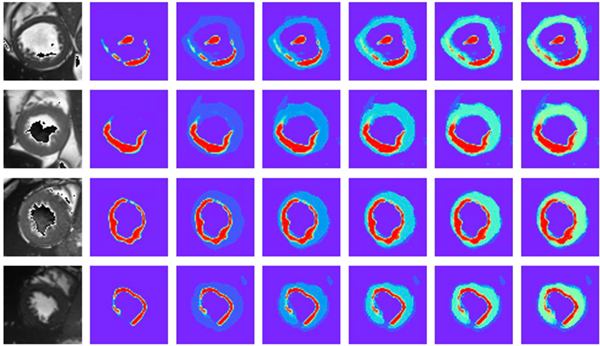

Sameer Bharadwaj, a first-year mechanical engineering master’s student, together with fellow students Shiqi Liu and Shengqiang Chen, researched methods to apply reinforcement learning to cardiac image segmentation. The team sought to improve the accuracy and robustness of Cardiac Magnetic Resonance (CMR) image segmentation, a technique that is difficult and time-intensive yet crucial for medical professionals diagnosing cardiovascular disease.

The team used an existing dataset of magnetic resonance images from 100 patients to both train and test their method, which ultimately allowed them to segment the CMR images with higher accuracy than state-of-the-art deep learning-based methods.

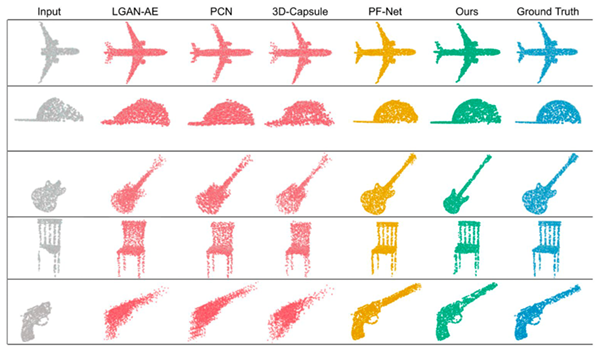

Beyond such specific and practical AI applications is a broad range of more general threats associated with AI, such as cybersecurity risks that can lead to physical or digital harm. Louise Xie, a first-year Ph.D. student in mechanical engineering, along with student team members Yihe Hua and Yao Lu, investigated ways to improve the security and robustness of algorithms in order to defend against adversarial attacks.

The ability to identify potential adversarial examples is key to developing trustworthy AI. The team developed a method that tricks victim algorithms into misidentifying the lidar point cloud measurement of an airplane as another object, such as a car, piano, or cup. Unlike common cybersecurity methods that perturb the point cloud, the team presented a novel attempt to connect security attack methods to the physical world, such as by applying the laws of aerodynamics. Both Bharadwaj and Xie agreed that the course significantly advanced their knowledge of trustworthy AI autonomy and gave them a clearer direction for applying such AI tools and techniques to their future work.

“The coursework and readings showed that the biggest problem in AI is that if the research algorithms are not robust enough for deployment, it creates a hesitation to examine or adopt new technologies,” said Xie.

“We learned many tools to make the AI algorithms more robust and that takeaway is a good foundation for continuing this work,” said Xie.

Bharadwaj, who was tackling his first master’s-level course remotely from India, was impressed with both the collaboration with his team and the support he got from Zhao and his teaching assistant, Mansur Arief. He says that reading the 25 papers helped him understand how vast this field is and taught him the fundamentals needed to apply this knowledge. Most importantly, though, he believes he now has a better idea of the areas of study he wants to pursue.

“I now have a much clearer view of what I want to learn and how the use of AI in the medical field can help doctors save lives by using these technologies to detect and diagnose disease,” said Bharadwaj.