Laboratory for Intelligent Interactive Real-Time Computing Systems (LINCS)

The Laboratory for Intelligent Interactive Real-Time Computing Systems (LINCS) has developed over 30 generations of novel and intelligent wearable computer systems, several generations of virtual coaches, and personal cognitive assistants.

Paradigm shift research: from context-aware wearable computers to virtual coaches and cognitive assistants

This laboratory has created over 30 generations of Wearable Computers, which have become a cornerstone of the wearable computing field. By applying and refining our rapid prototyping methodology, the Lab has introduced wearable computers in a number of previously untried application areas. Maintenance and inspection, augmented manufacturing, real-time speech translation, navigation, context-aware computing, and group collaboration have all been explored using wearable computers, and a variety of research has been performed to understand the principles underlying these designs. While the complexity of the prototype artifacts has increased by over two orders of magnitude, the total design effort has increased by less than a factor of two. Among multiple awards and recognitions, we would like to emphasize three prestigious international design awards received for our visionary designs (VuMan 3, MoCCA, and Digital Ink), along with multiple best paper awards.

Virtual coaches

Virtual Coaches represent a new generation of attentive, personalized systems that can continuously monitor their clients’ activities and surroundings, detect situations where intervention would be desirable, offer prompt assistance, and provide appropriate feedback and encouragement. Virtual Coaches are intended to augment, supplement and, in some circumstances, substitute for a clinician by offering guidance beyond and between episodic office visits. Sensors, combined with machine learning, can determine a user’s context: their location, physical activity, emotions, and social setting. Several advanced capabilities have been developed, such as automatic goal adjustment, scoring functions, exercise smoothness evaluation, and emotion recognition.
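To make "exercise smoothness evaluation" more concrete, the sketch below scores a movement with a dimensionless jerk measure, one commonly used smoothness metric. This is an illustration only: the text above does not say which metric the Virtual Coaches use, and the function name `dimensionless_jerk` and the assumption of a uniformly sampled one-dimensional trajectory are hypothetical.

```python
def dimensionless_jerk(positions, dt):
    """Smoothness score for a uniformly sampled 1-D trajectory.

    Hypothetical helper for illustration; lower values mean smoother motion.
    Computes (duration^3 / peak_speed^2) * integral(jerk^2 dt) using
    simple finite differences.
    """
    if len(positions) < 4:
        raise ValueError("need at least 4 samples to estimate jerk")
    if dt <= 0:
        raise ValueError("dt must be positive")

    # Successive finite differences: position -> velocity -> acceleration -> jerk.
    vel = [(b - a) / dt for a, b in zip(positions, positions[1:])]
    acc = [(b - a) / dt for a, b in zip(vel, vel[1:])]
    jerk = [(b - a) / dt for a, b in zip(acc, acc[1:])]

    peak_speed = max(abs(v) for v in vel)
    if peak_speed == 0:
        return 0.0  # no movement at all

    duration = dt * (len(positions) - 1)
    jerk_integral = sum(j * j for j in jerk) * dt
    return jerk_integral * duration ** 3 / peak_speed ** 2
```

Under this measure, a reach with added tremor scores much higher than a clean reach of the same duration and amplitude, which is the kind of difference a coach could turn into feedback.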

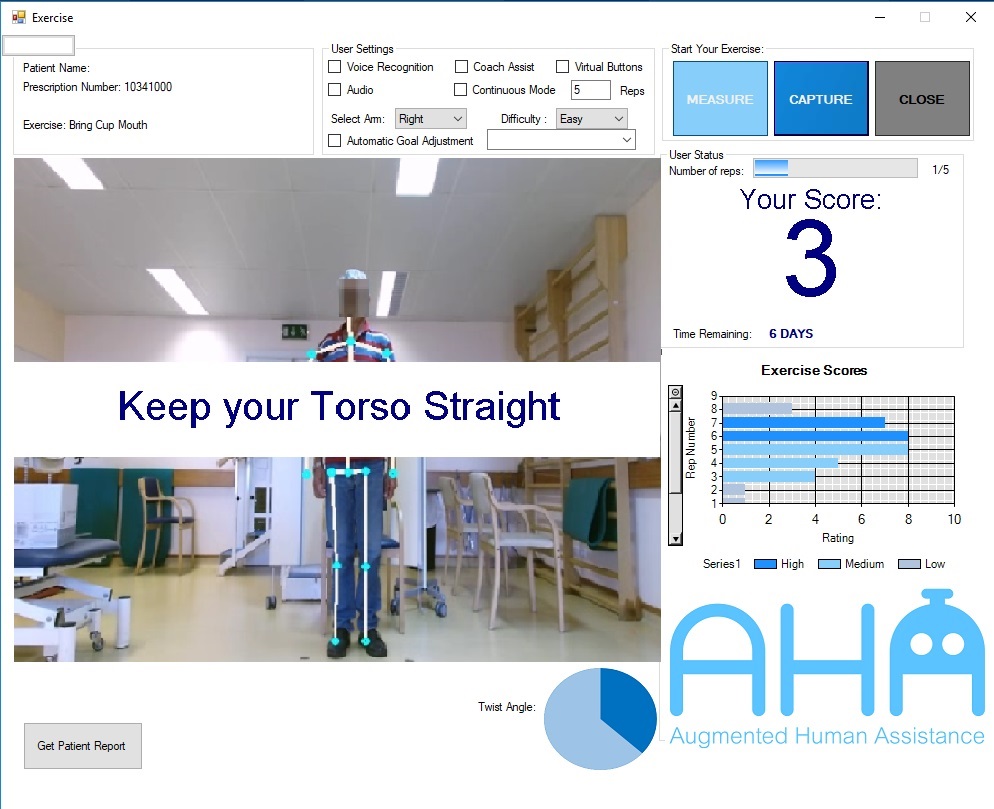

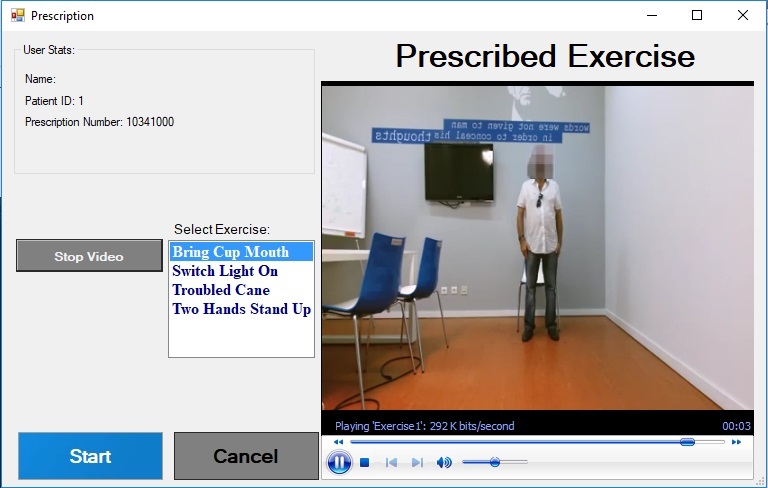

The Virtual Coach for Stroke Rehabilitation Therapy, designed for in-home use, benefits from our experience in building and deploying over a dozen virtual coaches, several of which are for physical rehabilitation. This Virtual Coach evaluates exercises and offers corrections and feedback for the rehabilitation of stroke survivors. It is composed of a tablet, a Kinect for monitoring motion, and a machine learning model to evaluate the quality of the exercise. Based on a set of rehabilitation exercises established by clinicians, we determined the correct and the most typical erroneous exercise postures and movements. The technologies support self-monitoring and sharing of progress with health-care providers, enabling clinicians to fine-tune the exercise regimen. The broader impact is the transformative effect this will have on at-home rehabilitation and maintenance exercises, as well as the potential applicability to other domains where repetition is needed to learn a skill.
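As a rough illustration of how skeleton data from a depth sensor could be compared against correct and typical erroneous postures, the sketch below computes a joint angle from three 3-D points and labels a posture by its nearest template. Everything here is an assumption made for the example: the features (elbow angle and trunk lean, in degrees), the template values, and the nearest-template rule are hypothetical and are not the Lab's trained model.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by the 3-D points a-b-c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("degenerate joint: two points coincide")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))

# Hypothetical templates: (elbow angle, trunk lean), both in degrees.
TEMPLATES = {
    "correct": (60.0, 5.0),
    "trunk_lean": (60.0, 25.0),
    "incomplete_flexion": (110.0, 5.0),
}

def classify_posture(features, templates=TEMPLATES):
    """Return the label of the template nearest to `features` (Euclidean)."""
    return min(templates, key=lambda label: math.dist(features, templates[label]))
```

For instance, `classify_posture((62.0, 22.0))` selects `"trunk_lean"` because that template is the closest in feature space. A deployed system would presumably learn such boundaries from clinician-labelled recordings and not from hand-set values.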

Our technology has the potential to encourage more frequent and engaging rehabilitation exercises, which would contribute to better patient outcomes, faster recovery, and fewer hospital readmissions. To increase users’ motivation, we have developed a suite of games related to stroke rehabilitation. An MS Kinect detects the user’s motions, and each game relates to a prescribed exercise. For example, the “Fishing” game corresponds to the “bring a cup to the mouth” exercise.

A related example is the Virtual Coach for Rehabilitation with Myoelectric Prostheses for Amputees, which is also designed to be used by patients at home following an initial tuning session at the clinic. The goal is to increase the functional abilities of amputees and improve the user’s neuromuscular control through targeted pre-prosthesis rehabilitation protocols. The Virtual Coach uses EMG signal detection and interpretation methods to facilitate execution of advanced clinical training protocols via an interactive, engaging video game. For individuals who undergo partial arm amputations, robotic myoelectric prosthetic devices can restore a great deal of arm and hand functionality. A significant challenge in adapting to a prosthetic device is for users to learn to use their brain to control the device effectively and safely. We use a skin EMG reader and an MS Kinect to provide feedback to users learning to use a prosthetic device. We have developed machine learning tools that determine what feedback to give a user performing physical therapy exercises, helping them learn to use their prosthetic device correctly.
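The following is a minimal sketch of one conventional first step in interpreting a surface EMG signal: rectifying it, smoothing it into an envelope, and thresholding the envelope to flag muscle contraction. It is offered as an assumption about what such processing can look like, not as the method used in this Virtual Coach. The function names, the trailing moving-average window, and the threshold are hypothetical, and the input is assumed to be zero-centered already.

```python
def emg_envelope(samples, window):
    """Rectify a zero-centered EMG signal and smooth it with a trailing
    moving average over at most `window` samples."""
    if window < 1:
        raise ValueError("window must be at least 1")
    rectified = [abs(s) for s in samples]
    envelope = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            # Drop the sample that just left the window.
            running -= rectified[i - window]
        envelope.append(running / min(i + 1, window))
    return envelope

def is_contracting(envelope, threshold):
    """Flag each envelope sample that exceeds the activation threshold."""
    return [level > threshold for level in envelope]
```

In a game setting, the resulting flags (or the envelope level itself) could drive an on-screen action, so that the user sees immediately whether a contraction was strong and sustained enough.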

Cognitive assistants

A Cognitive Assistant can provide guidance, user feedback, and error correction based on user progress. Its successful implementation can, for example, greatly reduce training time for equipment operators and increase both safety and success rate. We have developed a cognitive assistant that helps elderly people perform their vital signs measurements. Another example is our automated help desk, which provides remote expert assistance to new operators in cargo airlift aircraft.